In the 20th century, power belonged to whoever owned the printing press. In the 21st, it belongs to whoever controls the truth, or at least what appears to be true. We are living through an epistemological earthquake. Every day, the line between what is real and what is manufactured grows blurrier, not from a lack of evidence but from an excess of it.

Nowhere is this crisis more acute than in the world of DeepFakes: synthetic media created with artificial intelligence to deceive the eye, fool the ear, and undermine the very notion of authenticity. A president declares war in a video he never filmed. A CEO resigns in a voice message she never recorded. A protest is organized with documents that never existed. These scenarios are no longer science fiction; they are the playing field of our new information wars.

Enter Evo Tech, a company whose new platform, DeepFake, isn’t just trying to keep up with DeepFakes. It’s trying to outthink them.

An Architecture of Trust

To understand Evo Tech’s approach, one must think less like a tech company and more like a counterintelligence agency. At its core, DeepFake isn’t a single piece of software. It’s a system of AI agents, each acting as a forensic specialist: DF-I for images, DF-V for video, DF-A for audio, and DF-T for text. These agents don’t merely flag suspicious content; they interrogate it. They inspect the lighting in a passport photo. They analyze blink rates and jaw motion in a speech. They evaluate the breath spacing in a voicemail.

Each agent’s output is translated into a Reliability Score, a kind of lie detector calibrated not just by math but against mission-critical thresholds. These scores are routed through dashboards, governance modules, audit logs, and scheduling tools, because in Evo Tech’s universe verification isn’t a moment; it’s a process.

In this layered system, nothing moves forward unless it’s vetted, scored, and, if needed, challenged by a human analyst with the authority and training to make the final call.
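The article doesn’t say how Reliability Scores are combined or thresholded, so the following is only a rough illustration of the flow it describes. In this minimal Python sketch, per-modality agents each report a score; thresholds decide whether an item is released, held, or escalated to a human analyst; and every routing decision and override is written to an audit log. Apart from the agent names (DF-I, DF-V, DF-A, DF-T), everything here is a hypothetical assumption, not Evo Tech’s actual design. That includes the 0-to-1 score scale, the `route` and `analyst_override` functions, the threshold values, and the rule of routing on the lowest agent score.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Verdict(Enum):
    RELEASE = "release"            # every agent's score clears the release threshold
    HUMAN_REVIEW = "human_review"  # ambiguous: escalate to an analyst
    BLOCK = "block"                # lowest score is below the block threshold


@dataclass
class AgentResult:
    agent: str    # agent name from the article, e.g. "DF-V"
    score: float  # hypothetical reliability score: 0.0 (fake) to 1.0 (authentic)


@dataclass
class AuditLog:
    # Hypothetical append-only log of (timestamp, item_id, event, detail) tuples.
    entries: list = field(default_factory=list)

    def record(self, item_id: str, event: str, detail: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.entries.append((stamp, item_id, event, detail))


def route(item_id, results, release_at, block_below, log):
    """Decide the next step for an item from its agents' scores.

    Assumption: route on the minimum score, so a single suspicious
    modality (e.g. audio) is enough to withhold release.
    """
    if not results:
        raise ValueError("at least one agent result is required")
    worst = min(results, key=lambda r: r.score)
    if worst.score >= release_at:
        verdict = Verdict.RELEASE
    elif worst.score < block_below:
        verdict = Verdict.BLOCK
    else:
        verdict = Verdict.HUMAN_REVIEW
    log.record(item_id, "routed",
               f"{verdict.value} (lowest: {worst.agent}={worst.score:.2f})")
    return verdict


def analyst_override(item_id, new_verdict, analyst, reason, log):
    """A human analyst makes the final call; the override itself is logged."""
    log.record(item_id, "override", f"{new_verdict.value} by {analyst}: {reason}")
    return new_verdict


if __name__ == "__main__":
    log = AuditLog()
    clip = [AgentResult("DF-V", 0.91), AgentResult("DF-A", 0.62)]
    verdict = route("clip-001", clip, release_at=0.85, block_below=0.30, log=log)
    print(verdict)  # Verdict.HUMAN_REVIEW, because DF-A scored 0.62
    analyst_override("clip-001", Verdict.RELEASE, "analyst-7", "source confirmed", log)
    for entry in log.entries:
        print(entry)
```

Taking the minimum rather than an average is one conservative choice. It keeps a strong video score from masking a weak audio score, which fits the article’s emphasis on escalating doubtful material to a person rather than passing it automatically.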

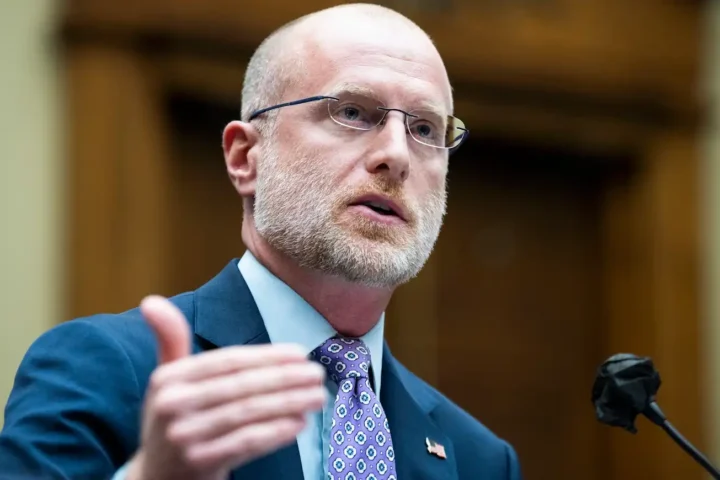

As Gene Pulera, Evo Tech’s chief architect and spokesperson, puts it: “We don’t treat DeepFake detection like a filter; we treat it like a command center.”

When Seeing Is No Longer Believing

What’s so revolutionary about Evo Tech’s platform is not that it detects DeepFakes (others do that too) but that it turns detection into decision support. Each flagged artifact becomes part of a larger case file. A fake video might connect to a known voice spoof from last month. A forged document might echo the language of a previous phishing campaign. What emerges is not just a digital fingerprint but a behavioral pattern: a kind of criminal choreography.

This isn’t overengineering. It’s adaptation. The DeepFake market is booming: forecasts project the synthetic media industry to surpass $33 billion by 2030. That growth means fakes will keep getting better, faster, and cheaper. From espionage to fraud, sabotage to scandal, the bad actors of tomorrow won’t wear masks; they’ll wear algorithms. And they won’t need access to nuclear codes to destabilize a region. They’ll just need to upload a video at the right time, to the right audience.

Against this rising tide, DeepFake acts like a digital levee, one engineered not just to hold but to grow stronger with every attempted breach.

The Ethics of Verification

There’s an uncomfortable truth here: we built this problem. We taught machines to mimic us, to speak like us, to render our faces in flawless resolution. Then we let them loose into a world already drowning in distrust.

But Evo Tech’s work is a reminder that the same intelligence we used to sow confusion can be repurposed to restore clarity. It’s not just about “good AI” versus “bad AI.” It’s about who sets the rules and who takes responsibility. In designing a system where every override is logged, every decision traceable, Evo Tech is not just building software. It’s building accountability.

Pulera sums it up best: “Verification is not a luxury anymore. It’s a civic infrastructure.”

From Defensive Tech to Democratic Resilience

What Evo Tech offers isn’t perfect. No system is. But what it signals is a shift, a cultural and technical commitment to slow down just enough to ask: Is this real? In a media economy obsessed with speed, DeepFake dares to prioritize accuracy. In a culture suspicious of authority, it invites transparency. And in a digital landscape where trust is in short supply, it offers, at the very least, a place to begin rebuilding it.

This matters beyond intelligence agencies and courtrooms. It matters for journalists trying to verify a source. For parents wondering if a video their child saw on TikTok is real. For voters deciding what to believe on election day. It matters for anyone who still thinks truth is worth fighting for.

If the last decade was about waking up to the dangers of fake media, perhaps the next can be about reclaiming reality. And companies like Evo Tech, in their quiet, methodical way, may be doing more than just flagging DeepFakes. They may be helping us reestablish the terms of truth itself.

In a time of synthetic voices and digital shadows, that may be our most human mission yet.